It seems this new language from Google (well, four years is new for a language) is worth a look, so I went ahead and did just that. The first thing one notices is that Ken Thompson is one of its designers, alongside Robert Griesemer and Rob Pike. This is the same Ken Thompson of Bell Labs who created Unix with Dennis Ritchie, and whose B language was the direct ancestor of “C”.

First, the download for 64-bit Windows: https://code.google.com/p/go/downloads/list

Once that is taken care of, we can get a feel for the beast by simply running go at the command line, which prints a summary of the available commands.

Let's get started. Poking around the command line is a fun start, but afterwards we will want an IDE and a closer look at some of the language features.

We can begin by creating the GOPATH environment variable (here it points at C:\Users\mario\go) and a small hello world application.

//Create hello world sample

```
C:\Users\mario>type C:\Users\mario\go\src\hello\hello.go
package main

import "fmt"

func main() {
	fmt.Println("Hello, World")
}
```

//Compile hello world (go build writes hello.exe to the current directory)

```
C:\Users\mario>go build hello
```

//Create the hello world binary executable under the bin folder

```
C:\Users\mario>go install hello

C:\Users\mario>dir go\bin

 Directory of C:\Users\mario\go\bin

23/11/2013  09:12 PM         1,560,064 hello.exe
```

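A rather large binary (about 1.5 MB) for a hello world console app… The reason is that Go links statically by default: the executable bundles the Go runtime (garbage collector, scheduler) together with every package it uses, such as fmt.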

Cute, but let's take a closer look at some of the key features. Creating a basic type with methods on it is a good way of getting a feel for the syntax. We will create a package named “pentest” containing a PenTest type, with methods users can call to get and set a time estimate for the project.

All the information you will need to get started is online, of course; I recommend starting with the following:

- Follow golang on Twitter https://twitter.com/golang

- Find packages you will need to import http://go-search.org

- Great introduction http://golang.org/doc/effective_go.html

- Details on language specs http://golang.org/ref/spec

After perusing the documentation above, here's the basic pentest package, with just enough material to cover the fundamentals:

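```go
package pentest

import (
	"fmt"
	"time"
)

// PenTestType is an enum-like type for the kind of engagement.
type PenTestType int

// iota numbers the constants 1, 2, 3, 4. Writing "= 1" on the first line
// alone would not work: the following lines repeat that same expression,
// so all four constants would equal 1.
const (
	internal PenTestType = iota + 1
	external
	web
	phishing
)

// PenTest describes a single penetration test engagement.
type PenTest struct {
	pttype             PenTestType
	Description        string
	totalTimeEstimated time.Time
	totalTimeTaken     time.Time
}

// getTimeEstimated returns the current time estimate.
func (p PenTest) getTimeEstimated() time.Time {
	fmt.Printf("Returning time: %s\n", p.totalTimeEstimated.Local())
	return p.totalTimeEstimated
}

// setTimeEstimated needs a pointer receiver so the change is visible to the caller.
func (p *PenTest) setTimeEstimated(t time.Time) {
	fmt.Println("Setting time:", t.Local())
	p.totalTimeEstimated = t
}
```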

Importing is done with import; simple enough, especially if one is familiar with Java.

Next, I wanted to create a simple enum-like value for the type of pentest, and this is where the syntax gets funky. It does take some getting used to, certainly if your background is C/C++, where enums are defined directly with the enum keyword. Go has no enum keyword: instead you declare a named type and a block of typed constants, usually numbered with iota.

So far nothing too unfamiliar, except for type. Gone is the class keyword from Java and C++; Go doesn't have one! Instead we use the type keyword to declare our user-defined type, here a struct.

And what's this: no private or public access specifiers? And why are the methods defined outside of the type? Heresy! How are we to control access to our object? Let's proceed; perhaps the language has some semantics to handle this.

The first thing to notice when declaring fields and methods is that the syntax is backwards from Java and C++: the name comes first, then the type:

- m_SomeMemberVariable type

- func(param_Variable type)
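The name-before-type rule applies everywhere: package variables, struct fields, parameters and results. In the sketch below, the C/C++ equivalents sit in the comments for comparison; retries, target and addPort are hypothetical names chosen only for illustration.

```go
package main

import "fmt"

var retries int // C/Java: int retries;

// target is a hypothetical struct; fields are declared as "name type".
type target struct {
	host string // C++: std::string host;
	port int    // C++: int port;
}

// C: int addPort(struct target *t, int delta);
// Parameters are "name type" too, and the result type comes last.
func addPort(t *target, delta int) int {
	t.port += delta
	return t.port
}

func main() {
	t := target{host: "example.com", port: 8000}
	retries = 3
	fmt.Println(addPort(&t, 80), retries) // 8080 3
}
```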

Expect syntax errors initially, especially if your muscle memory is used to the old foo(int i) syntax. Writing the “class” methods outside the class is also going to take some adjustment time. Sure, this is similar to declaring members in a header file and implementing them in a .cpp file, except that in Go the type declaration lists only the fields, and every method is written outside it. Essentially, it's as if C++ member functions could only be written outside the class declaration, with the this pointer declared explicitly (as the method's receiver) in each function's signature.

Syntactic sugar aside, let's try to use our class.

Let us use the PenTest type as it stands. Here's the main file:

```go
package main

import (
	"fmt"
	"pentest"
	"time"
)

/* mario@superconfigure.com*/

func main() {
	fmt.Println("Hello, World") // This is a comment

	p := pentest.PenTest{}
	p.setTimeEstimated(time.Time{}) // this line does not compile
}
```

Simple enough, except that the p.setTimeEstimated call doesn't compile. So what's the problem? The compiler rejects it with: “cannot refer to unexported field or method”

Looks like we need an access specifier, but how?

Hmm, what if we modify the function signature slightly in our pentest.go file:

```go
func (p *PenTest) SetTimeEstimated(t time.Time) {
	// ...same body as before...
}
```

Now it works (main.go also needs to import "time" for time.Time{}):

```go
p := pentest.PenTest{}
t := time.Time{}
p.SetTimeEstimated(t)
```

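So the capital first letter, ‘S’ vs ‘s’, is what makes the method public! In Go, a name that starts with an upper-case letter is exported from its package; a lower-case name is visible only inside the package that declares it. The same rule covers types, struct fields, functions and constants.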

Cool stuff, but serious development is going to require an IDE and a debugger.

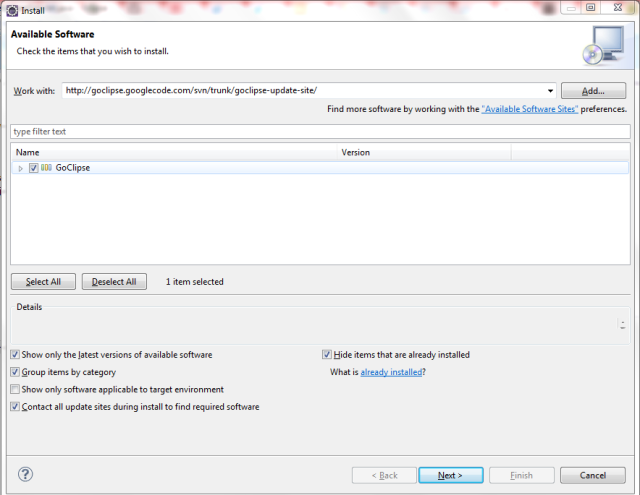

After looking around, I found a Go plugin for Eclipse named GoClipse. Install it from Eclipse via Help > Install New Software, and enter the update site http://goclipse.googlecode.com/svn/trunk/goclipse-update-site/

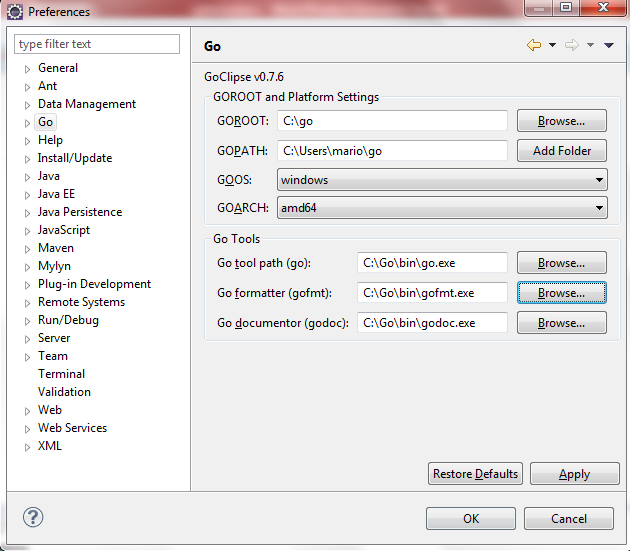

Configure the GoClipse settings in Eclipse:

To debug your apps, you will need the GDB debugger; here's the build I am using: ftp://ftp.equation.com/gdb/snapshot/64/gdb.exe

I have only just begun looking at the possibilities, but I expect that a language with this pedigree, backed by the behemoth that is Google, will see steadily growing adoption.